USB-C is the emerging standard for charging and transferring data. Right now, it’s included in devices like the newest laptops, phones, and tablets and—given time—it’ll spread to pretty much everything that currently uses the older, larger USB connector.

USB-C features a new, smaller connector shape that’s reversible so it’s easier to plug in. USB-C cables can carry significantly more power, so they can be used to charge larger devices like laptops. They also offer up to double the transfer speed of USB 3 at 10 Gbps. While connectors are not backwards compatible, the standards are, so adapters can be used with older devices.

Though the specifications for USB-C were first published in 2014, it’s really just in the last year that the technology has caught on. It’s now shaping up to be a real replacement for not only older USB standards, but also other standards like Thunderbolt and DisplayPort. Testing is even in the works to deliver a new USB audio standard using USB-C as a potential replacement for the 3.5mm audio jack. USB-C is closely intertwined with other new standards, as well—like USB 3.1 for faster speeds and USB Power Delivery for improved power-delivery over USB connections.

Type-C Features a New Connector Shape

USB Type-C has a new, tiny physical connector, roughly the size of a micro USB connector. The USB-C connector itself can support various new USB standards like USB 3.1 and USB Power Delivery (USB PD).

The standard USB connector you’re most familiar with is USB Type-A. Even as we’ve moved from USB 1 to USB 2 and on to modern USB 3 devices, that connector has stayed the same. It’s as massive as ever, and it only plugs in one way (which is obviously never the way you try to plug it in the first time). But as devices became smaller and thinner, those massive USB ports just didn’t fit. This gave rise to lots of other USB connector shapes like the “micro” and “mini” connectors.

This awkward collection of differently-shaped connectors for different-size devices is finally coming to a close. USB Type-C offers a new connector standard that’s very small. It’s about a third the size of an old USB Type-A plug. This is a single connector standard that every device should be able to use. You’ll just need a single cable, whether you’re connecting an external hard drive to your laptop or charging your smartphone from a USB charger. That one tiny connector is small enough to fit into a super-thin mobile device, but also powerful enough to connect all the peripherals you want to your laptop. The cable itself has USB Type-C connectors at both ends—it’s all one connector.

USB-C provides plenty to like. It’s reversible, so you’ll no longer have to flip the connector around a minimum of three times looking for the correct orientation. It’s a single USB connector shape that all devices should adopt, so you won’t have to keep loads of different USB cables with different connector shapes for your various devices. And you’ll have no more massive ports taking up an unnecessary amount of room on ever-thinner devices.

USB Type-C ports can also support a variety of different protocols using “alternate modes,” which allow adapters to output HDMI, VGA, DisplayPort, or other types of connections from that single USB port. Apple’s USB-C Digital Multiport Adapter is a good example of this: it lets you connect HDMI, VGA, larger USB Type-A connectors, and a smaller USB Type-C connector through a single port. The mess of USB, HDMI, DisplayPort, VGA, and power ports on typical laptops can be streamlined into a single type of port.

USB-C, USB PD, and Power Delivery

The USB PD specification is also closely intertwined with USB Type-C. Currently, a USB 2.0 connection provides up to 2.5 watts of power—enough to charge your phone or tablet, but that’s about it. The USB PD specification supported by USB-C ups this power delivery to 100 watts. It’s bi-directional, so a device can either send or receive power. And this power can be transferred at the same time the device is transmitting data across the connection. This kind of power delivery could even let you charge a laptop, which usually requires up to about 60 watts.
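To put those wattage figures in perspective, here is a minimal Python sketch of the arithmetic. It assumes an idealized charger (no conversion losses, constant power) and a hypothetical 50 Wh laptop battery; neither number comes from a real product, and a real laptop may draw less than the 100 W maximum.

```python
def hours_to_charge(battery_wh: float, supply_watts: float) -> float:
    """Idealized charge time: stored energy divided by supply power."""
    if supply_watts <= 0:
        raise ValueError("supply_watts must be positive")
    return battery_wh / supply_watts


USB2_WATTS = 2.5          # USB 2.0 figure quoted in the article
USB_PD_WATTS = 100.0      # USB PD maximum quoted in the article
LAPTOP_BATTERY_WH = 50.0  # hypothetical battery capacity, for illustration only

for label, watts in [("USB 2.0", USB2_WATTS), ("USB PD", USB_PD_WATTS)]:
    hours = hours_to_charge(LAPTOP_BATTERY_WH, watts)
    print(f"{label}: about {hours:.1f} hours")
```

Under these assumptions the same battery takes 40 times longer to fill at 2.5 watts than at 100 watts, which is why a USB 2.0 port is not a practical laptop charger.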

Apple's new MacBook and Google's new Chromebook Pixel both use their USB-C ports as their charging ports. USB-C could spell the end of all those proprietary laptop charging cables, with everything charging via a standard USB connection. You could even charge your laptop from one of those portable battery packs you charge your smartphones and other portable devices from today. You could plug your laptop into an external display connected to a power cable, and that external display would charge your laptop as you used it as an external display — all via the one little USB Type-C connection.

There is one catch, though, at least at the moment. Just because a device or cable supports USB-C doesn’t necessarily mean it also supports USB PD. So, you’ll need to make sure that the devices and cables you buy support both USB-C and USB PD.

USB-C, USB 3.1, and Transfer Rates

USB 3.1 is a new USB standard. USB 3’s theoretical bandwidth is 5 Gbps, while USB 3.1’s is 10 Gbps. That’s double the bandwidth, as fast as a first-generation Thunderbolt connector.
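As a rough illustration of what doubling the bandwidth means, the Python sketch below estimates best-case transfer times from the theoretical rates. It assumes decimal units (1 GB = 8 gigabits) and ignores protocol overhead and drive speed, so real transfers will be slower; the 10 GB file size is just an example.

```python
def transfer_seconds(size_gigabytes: float, link_gbps: float) -> float:
    """Best-case transfer time at the link's theoretical rate."""
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    size_gigabits = size_gigabytes * 8  # 8 bits per byte
    return size_gigabits / link_gbps


FILE_GB = 10.0  # example file size, chosen for illustration

for label, gbps in [("USB 3 (5 Gbps)", 5.0), ("USB 3.1 (10 Gbps)", 10.0)]:
    print(f"{label}: {transfer_seconds(FILE_GB, gbps):.0f} s")
```

At these theoretical rates the example file takes 16 seconds over USB 3 and 8 seconds over USB 3.1.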

USB Type-C isn’t the same thing as USB 3.1, though. USB Type-C is just a connector shape, and the underlying technology could just be USB 2 or USB 3.0. In fact, Nokia’s N1 Android tablet uses a USB Type-C connector, but underneath it’s all USB 2.0—not even USB 3.0. However, these technologies are closely related. When buying devices, you’ll just need to keep your eye on the details and make sure you’re buying devices (and cables) that support USB 3.1.
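One way to keep the distinction straight is to treat the connector shape and the data standard as two separate properties of a port. The Python sketch below does that; the `Port` class, the `link_rate_gbps` helper, and the laptop entry are all hypothetical, made up for illustration. The rates are the theoretical figures (480 Mbps for USB 2.0, plus the 5 and 10 Gbps quoted in this article).

```python
from dataclasses import dataclass

# Theoretical signaling rates in Gbps, keyed by USB standard.
RATES_GBPS = {"USB 2.0": 0.48, "USB 3.0": 5.0, "USB 3.1": 10.0}


@dataclass(frozen=True)
class Port:
    device: str
    connector: str  # physical shape, e.g. "Type-C" or "Type-A"
    standard: str   # data standard behind the connector


def link_rate_gbps(a: Port, b: Port) -> float:
    """Two connected ports can go no faster than the slower one."""
    return min(RATES_GBPS[a.standard], RATES_GBPS[b.standard])


tablet = Port("Nokia N1", "Type-C", "USB 2.0")
laptop = Port("hypothetical laptop", "Type-C", "USB 3.1")

# Same connector shape on both ends, but the link runs at USB 2.0 speed.
print(link_rate_gbps(tablet, laptop))
```

Both ports here share the Type-C shape, yet the connection is limited by the tablet's USB 2.0 standard, which is the detail to check before buying.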

Backwards Compatibility

The physical USB-C connector isn’t backwards compatible, but the underlying USB standard is. You can’t plug older USB devices into a modern, tiny USB-C port, nor can you plug a USB-C connector into an older, larger USB port. But that doesn’t mean you have to discard all your old peripherals. USB 3.1 is still backwards compatible with older versions of USB, so you just need a physical adapter with a USB-C connector on one end and a larger, older-style USB port on the other end. You can then plug your older devices directly into a USB Type-C port.

Realistically, many computers will have both USB Type-C ports and larger USB Type-A ports for the immediate future—like Google’s Chromebook Pixel. You’ll be able to slowly transition from your old devices, getting new peripherals with USB Type-C connectors. Even if you get a computer with only USB Type-C ports, like Apple’s new MacBook, adapters and hubs will fill the gap.

USB Type-C is a worthy upgrade. It’s making waves on the newer MacBooks and some mobile devices, but it’s not an Apple- or mobile-only technology. As time goes on, USB-C will appear in more and more devices of all types. USB-C may even replace the Lightning connector on Apple’s iPhones and iPads one day. Lightning doesn’t have many advantages over USB Type-C besides being a proprietary standard Apple can charge licensing fees for. Imagine a day when your Android-using friends need a charge and you don’t have to give the sorrowful “Sorry, I’ve just got an iPhone charger” line!

The GPD P2 Max, billed as the smallest Ultrabook, now has a full-function USB-C port.

That means a single hub may be all you need for charging, data transfer, RJ45 Ethernet, 4K decoding, and just about anything else you can imagine.

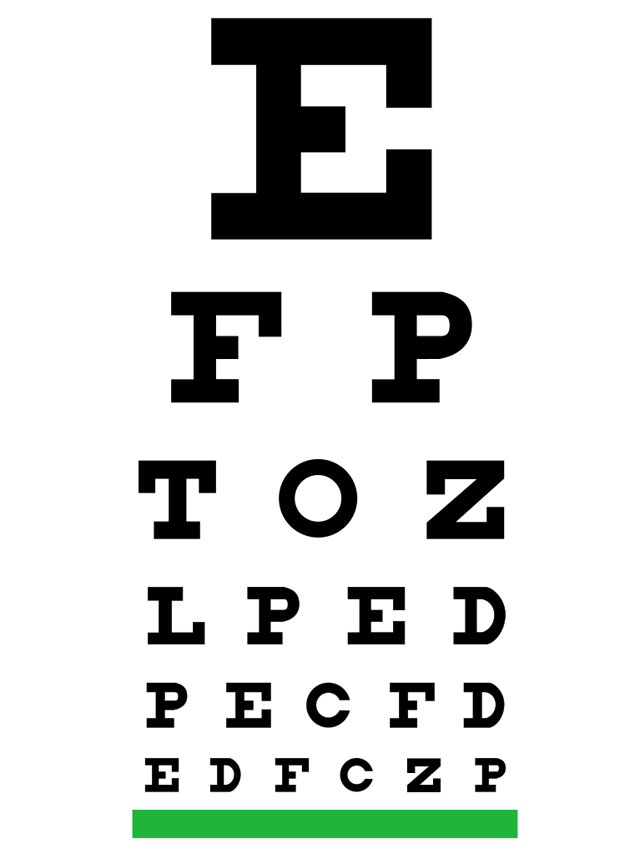

Let’s look at how human visual acuity is measured: we have all heard of “20/20 vision”, and it would make sense to think that it means “perfect” or “maximum” vision, but that’s not true at all. The 20/20 vision test comes from the Snellen chart (on the right), which was invented in 1862 as a means to measure visual acuity for medical purposes. This is important because Snellen was trying to spot low vision, which is a medical problem. No medical patient has ever complained of having above-average visual acuity.
